Leverage AWS Services and CDK to Build an Amazing Read4Me App!

In this article I will show you how I built a fully serverless project, using AWS Step Functions to orchestrate the whole flow.

Hey everyone! Today I want to share a project I've been working on during my OOO days (yes, this is how I like to spend my free time, don't judge me). I wanted to improve my AWS experience by building a realistic architecture, so I googled "aws real projects" and found this video describing an interesting text-to-speech system. So I got down to work!!

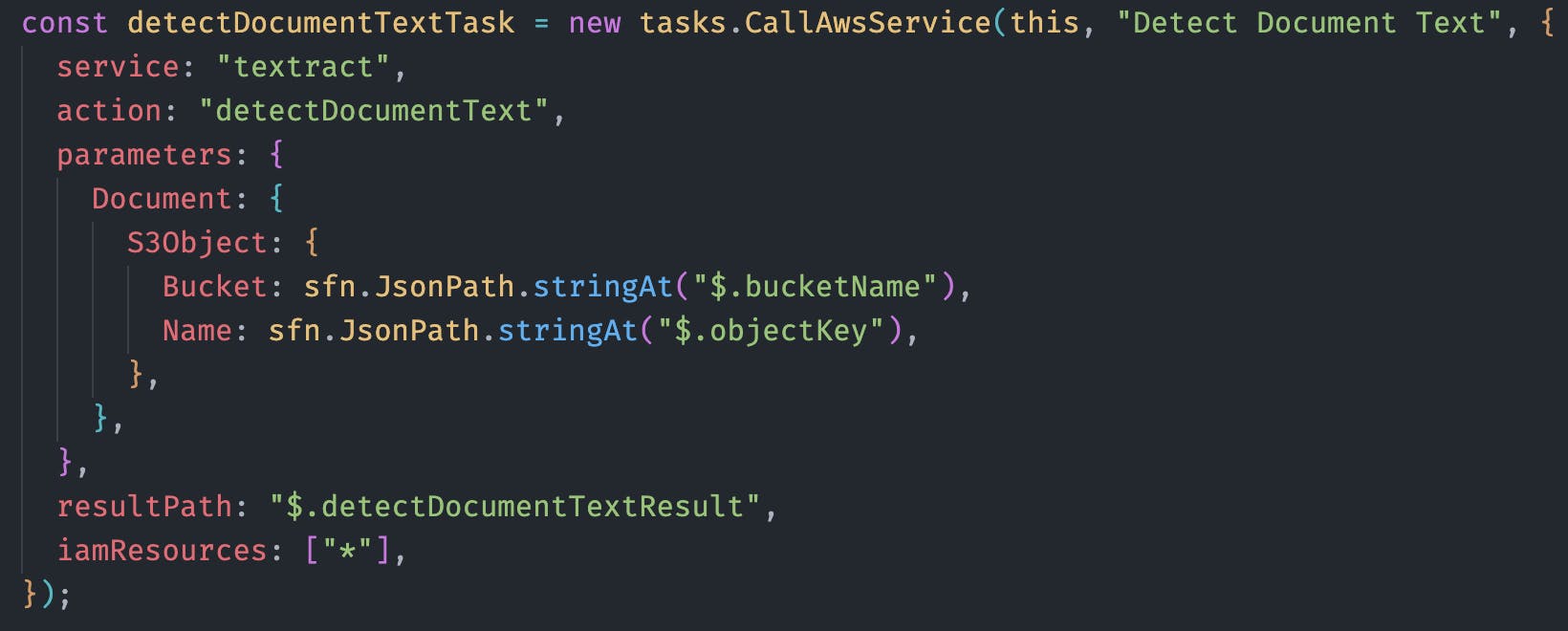

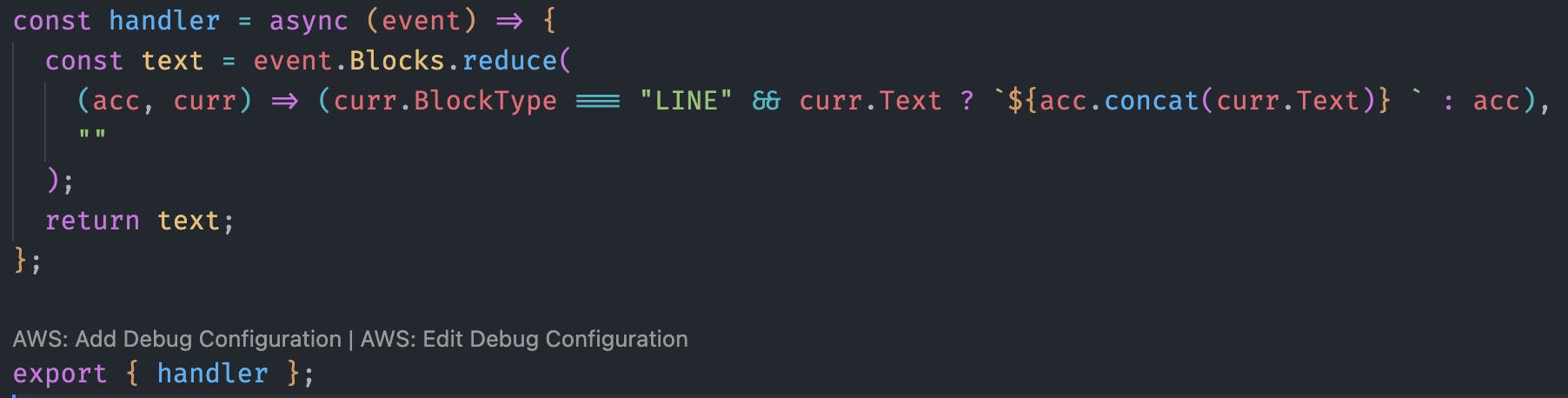

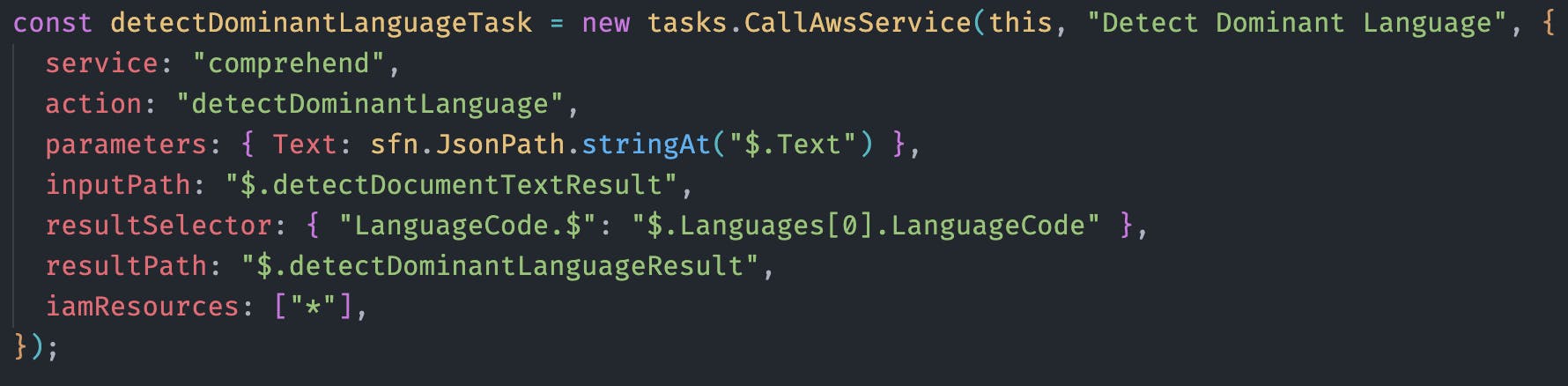

I used the AWS Cloud Development Kit (CDK) with TypeScript to implement the whole architecture. I've attached some screenshots to show how I create some of the resources using Infrastructure as Code, as well as some of the Lambda function code. You can find all the source code here: github.com/davidivad96/read4me (leave me a star ⭐️ if you find it useful).

✨ The project: Read4Me

Read4Me is a system that enables the visually impaired to hear documents. By leveraging multiple AWS AI services such as Amazon Textract, Amazon Comprehend and Amazon Polly, we can extract the text from a document, detect its dominant language and transform it into an audio file. This is very helpful for people who have a visual impairment, but also for lazy people who don't feel like reading and just want to lie down and listen (I admit it, I'm one of those).

AWS Services used:

Step Functions: Serverless orchestration service that lets you combine multiple AWS services to build business-critical applications.

S3: Object storage service.

Lambda: Compute service that lets you run code without provisioning or managing servers.

Textract: AI service that lets you add document text detection and analysis to your applications.

Comprehend: AI service that uses natural language processing (NLP) to extract insights about the content of documents.

Polly: AI service that converts text into lifelike speech.

Amplify: Framework that enables developers to develop and deploy cloud-powered mobile and web apps. It is used to deploy and host the frontend.

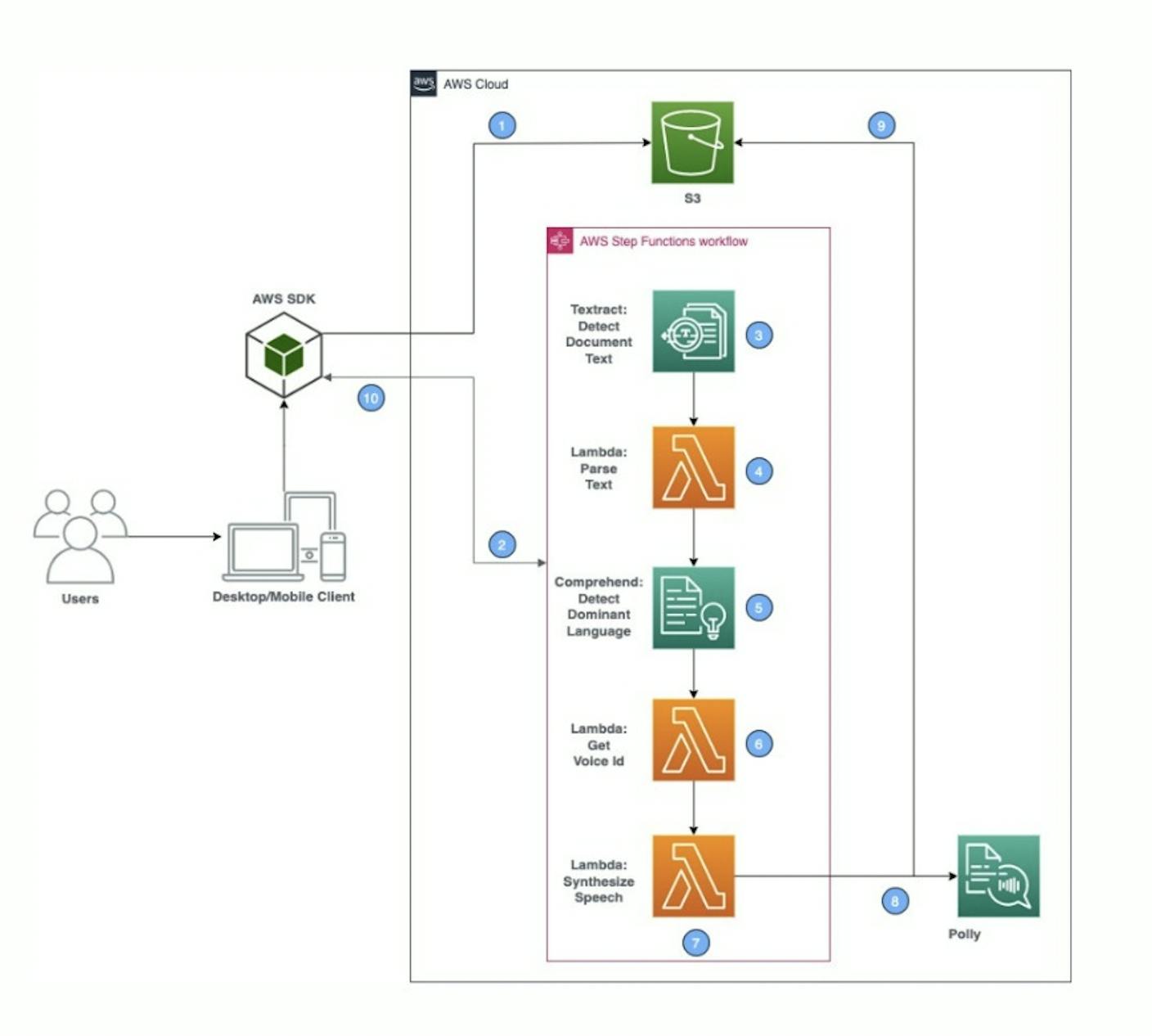

🏗 Architecture

⚙️ Step Functions State Machine

The core of the architecture is the workflow implemented by AWS Step Functions. This is where all the magic happens 🪄.
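To give a rough idea of what that workflow looks like, here is a minimal sketch of the five steps chained together as an Amazon States Language (ASL) definition. It is not the code from the repo: the `chainSteps` helper is hypothetical, the state names are simply taken from the step titles in this article, and calling Textract and Comprehend through the Step Functions AWS SDK integrations (instead of through Lambda functions) is an assumption. Parameters and data passing between states are left out.

```typescript
// Minimal ASL types; only the fields used in this sketch.
interface TaskState {
  Type: "Task";
  Resource: string;
  Next?: string;
  End?: boolean;
}

interface StateMachineDefinition {
  StartAt: string;
  States: Record<string, TaskState>;
}

interface Step {
  stepName: string;
  resource: string;
}

// Wire an ordered list of steps into a linear workflow:
// every step points to the following one, and the last one ends the execution.
function chainSteps(steps: Step[]): StateMachineDefinition {
  if (steps.length === 0) {
    throw new Error("A state machine needs at least one step");
  }
  const states: Record<string, TaskState> = {};
  steps.forEach((step, index) => {
    const isLast = index === steps.length - 1;
    states[step.stepName] = isLast
      ? { Type: "Task", Resource: step.resource, End: true }
      : { Type: "Task", Resource: step.resource, Next: steps[index + 1].stepName };
  });
  return { StartAt: steps[0].stepName, States: states };
}

// Generic "invoke a Lambda function" integration (the function itself is set in Parameters, omitted here).
const LAMBDA_INVOKE = "arn:aws:states:::lambda:invoke";

const read4meDefinition = chainSteps([
  // Assumption: Textract and Comprehend are called via AWS SDK service integrations.
  { stepName: "ExtractText", resource: "arn:aws:states:::aws-sdk:textract:detectDocumentText" },
  { stepName: "ParseText", resource: LAMBDA_INVOKE },
  { stepName: "DetectDominantLanguage", resource: "arn:aws:states:::aws-sdk:comprehend:detectDominantLanguage" },
  { stepName: "GetVoiceId", resource: LAMBDA_INVOKE },
  { stepName: "SynthesizeSpeech", resource: LAMBDA_INVOKE },
]);

console.log(JSON.stringify(read4meDefinition, null, 2));
```

In a real CDK stack you would normally let the CDK constructs generate this definition for you; the sketch only shows the shape of the chain.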

📖 Step 1: Extract Text

Amazon Textract is an amazing AWS product that allows you to analyse and extract text from any document. Well, that's what the docs say, but it's not quite true: it only supports JPEG, PNG, PDF, and TIFF files. Don't lie to me, Amazon 😒.

Using its DetectDocumentText API we can take a document uploaded to S3 and detect the text in a synchronous process. It also has an asynchronous way of doing this (usually useful for larger documents) but we want to make things simple, right?
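As a rough illustration, this is the shape of the request that DetectDocumentText expects when the document lives in S3, together with a small guard for the file types listed above. The helper names, the bucket name and the object key are made up for the example; only the `Document.S3Object.Bucket` / `Name` structure comes from the Textract API.

```typescript
// Extensions for the formats mentioned above (JPEG, PNG, PDF, TIFF).
const SUPPORTED_EXTENSIONS = ["jpg", "jpeg", "png", "pdf", "tif", "tiff"];

// Request shape of DetectDocumentText for a document stored in S3.
interface DetectDocumentTextInput {
  Document: { S3Object: { Bucket: string; Name: string } };
}

// Hypothetical helper: checks the file extension of the S3 object key.
function isSupportedDocument(objectKey: string): boolean {
  const dot = objectKey.lastIndexOf(".");
  if (dot < 0) {
    return false;
  }
  const extension = objectKey.slice(dot + 1).toLowerCase();
  return SUPPORTED_EXTENSIONS.indexOf(extension) !== -1;
}

// Hypothetical helper: builds the request, rejecting unsupported file types early.
function buildDetectDocumentTextInput(bucket: string, objectKey: string): DetectDocumentTextInput {
  if (!isSupportedDocument(objectKey)) {
    throw new Error(`Unsupported document type: ${objectKey}`);
  }
  return { Document: { S3Object: { Bucket: bucket, Name: objectKey } } };
}

// "read4me-documents" and the key are placeholder values.
const textractInput = buildDetectDocumentTextInput("read4me-documents", "uploads/letter.PDF");
console.log(JSON.stringify(textractInput));
```

With the AWS SDK for JavaScript v3, an object like this is what you would pass to `DetectDocumentTextCommand` from `@aws-sdk/client-textract`.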

🧹 Step 2: Parse Text

I'll tell you something: the response syntax of the DetectDocumentText API is awful. It's an array of "Blocks", each one containing a line or word of the text plus a lot of other useless data. I just want a string with all the text detected in the document, is that too much to ask?

Well, that's what this step does. I implemented a Lambda function that does exactly that: it transforms ugly blocks into beautiful text.
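The idea can be sketched in a few lines. This is a simplified version, not necessarily the exact code of my Lambda: the `blocksToText` name is hypothetical, and joining lines with a newline is an assumption (a space would work too). Textract returns PAGE, LINE and WORD blocks, and every word already appears inside its LINE block, so keeping only the LINE blocks avoids duplicated text.

```typescript
// Only the two fields of a Textract block that matter here.
interface TextractBlock {
  BlockType?: string; // e.g. "PAGE", "LINE" or "WORD"
  Text?: string;
}

// Keep the LINE blocks and join their text into a single string.
function blocksToText(blocks: TextractBlock[]): string {
  return blocks
    .filter((block) => block.BlockType === "LINE" && typeof block.Text === "string")
    .map((block) => block.Text as string)
    .join("\n");
}

// Sample input imitating a (heavily trimmed) DetectDocumentText response.
const sampleBlocks: TextractBlock[] = [
  { BlockType: "PAGE" },
  { BlockType: "LINE", Text: "Hello world" },
  { BlockType: "WORD", Text: "Hello" },
  { BlockType: "WORD", Text: "world" },
  { BlockType: "LINE", Text: "Second line" },
];

console.log(blocksToText(sampleBlocks));
// prints:
// Hello world
// Second line
```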

🇪🇸 Step 3: Detect Dominant Language

Amazon Comprehend is a natural language processing (NLP) service. It can do several things such as syntax analysis, key phrase detection, sentiment analysis or dominant language detection. The last one is the one we are interested in. I mean, who cares about syntax or sentiments? 🤷🏽‍♂️.

We need to detect the dominant language of the document because we need to use a voice that matches that language. As we will see later, Amazon Polly supports a lot of different voices, and each voice speaks a specific language. You could try to read an English document with a Brazilian voice but that probably won't work out well (or maybe it will, who knows).

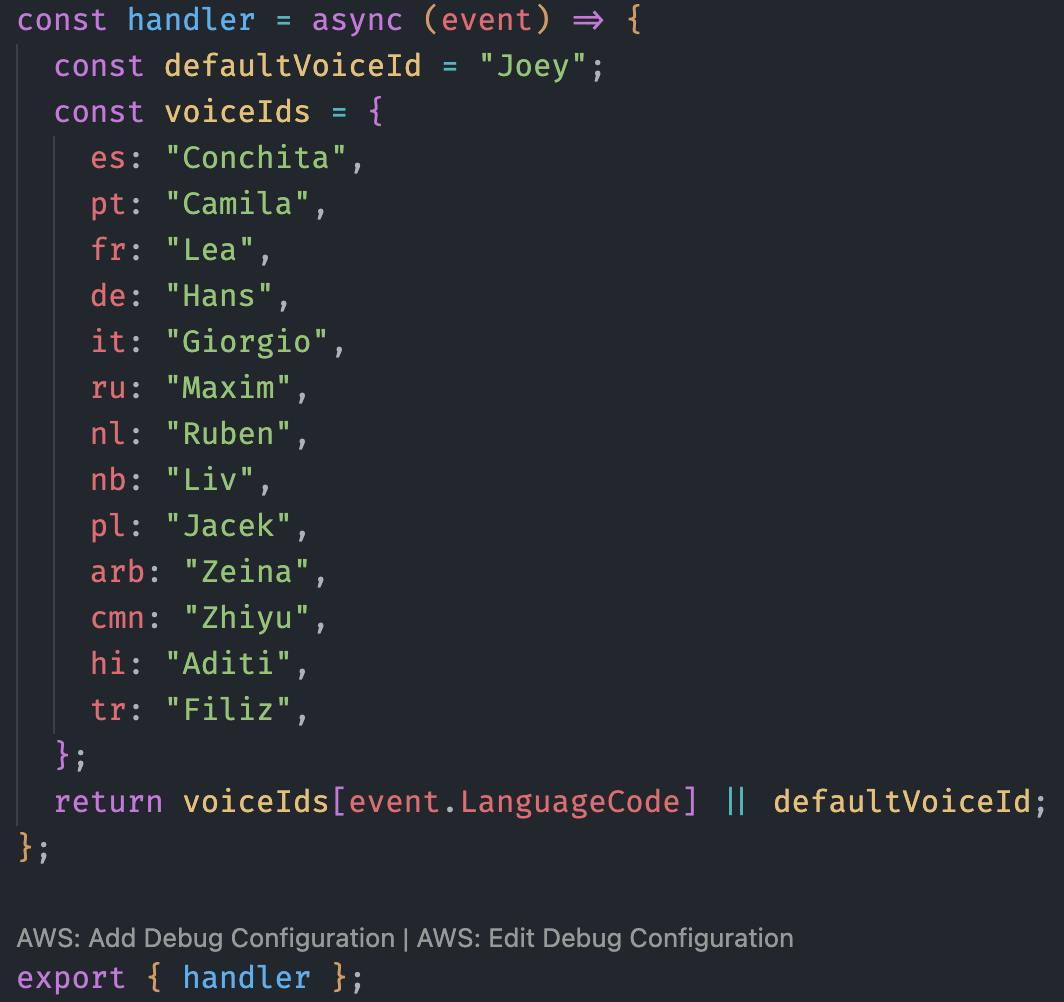
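DetectDominantLanguage returns a list of candidate languages, each with a language code and a confidence score. A minimal sketch of picking the winner could look like this; the `pickDominantLanguage` helper and the English fallback are assumptions for illustration, not something taken from the repo.

```typescript
// One entry of the "Languages" array in the DetectDominantLanguage response.
interface DetectedLanguage {
  LanguageCode?: string;
  Score?: number;
}

// Return the language code with the highest score, or the fallback if none is usable.
function pickDominantLanguage(languages: DetectedLanguage[], fallback: string = "en"): string {
  let best: DetectedLanguage | undefined;
  for (const candidate of languages) {
    if (candidate.LanguageCode === undefined) {
      continue; // skip entries without a language code
    }
    if (best === undefined || (candidate.Score ?? 0) > (best.Score ?? 0)) {
      best = candidate;
    }
  }
  return best?.LanguageCode ?? fallback;
}

// Made-up scores, just to exercise the function.
const sampleLanguages: DetectedLanguage[] = [
  { LanguageCode: "en", Score: 0.12 },
  { LanguageCode: "es", Score: 0.85 },
];

console.log(pickDominantLanguage(sampleLanguages));
```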

🗣 Step 4: Get Voice Id

This is just a Lambda function which transforms the detected language into a voice supported by Amazon Polly. Not much more to tell here.
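For the curious, the mapping can be as simple as a lookup table. The sketch below is illustrative: the voice IDs are real Amazon Polly voices, but which voice I pair with each language here, the `getVoiceId` name and the fallback voice are assumptions, and the table covers only a handful of languages.

```typescript
// Language code (as returned by Comprehend) -> Amazon Polly voice ID.
const VOICE_BY_LANGUAGE = new Map<string, string>([
  ["en", "Joanna"], // US English
  ["es", "Lucia"],  // Castilian Spanish
  ["fr", "Celine"], // French
  ["de", "Vicki"],  // German
  ["it", "Bianca"], // Italian
  ["pt", "Camila"], // Brazilian Portuguese
]);

// Hypothetical helper: falls back to a default voice for unmapped languages.
function getVoiceId(languageCode: string, fallbackVoice: string = "Joanna"): string {
  // Codes can carry a region suffix (e.g. "zh-TW"); keep only the base language.
  const baseLanguage = languageCode.toLowerCase().split("-")[0];
  return VOICE_BY_LANGUAGE.get(baseLanguage) ?? fallbackVoice;
}

console.log(getVoiceId("es"));
```

Falling back to a default voice keeps the state machine from failing on a language that isn't in the table, at the cost of a funny accent.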

🔉 Step 5: Synthesize Speech

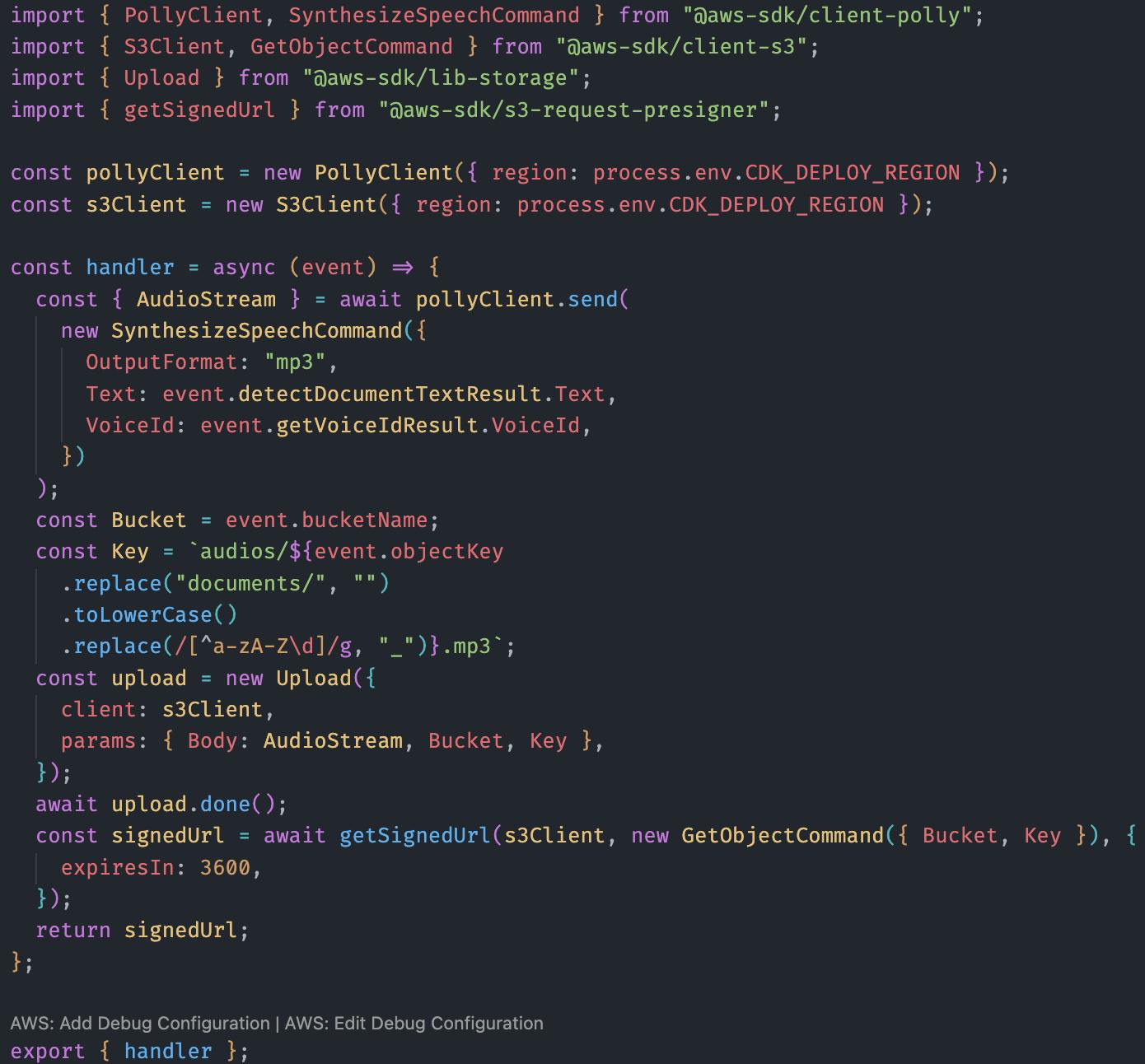

Finally we need to synthesize the speech from the extracted text using the chosen voice. We use Amazon Polly, which is a Text-to-Speech (TTS) cloud service. For this final step we implement a Lambda function which does a few things:

Use the Amazon Polly SynthesizeSpeech API to get an ".mp3" file with the resulting audio.

Upload the audio file to the same bucket where we first uploaded the document.

Create and return a pre-signed URL for this file. This is useful so that the frontend can easily access the ".mp3" file.
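Here is a small sketch of the pure parts of that Lambda: building the SynthesizeSpeech request and deciding where the audio file goes in the bucket. Both helper names are hypothetical, and storing the ".mp3" next to the document under the same name is an assumption; the actual AWS calls are left out so the snippet runs without the SDK.

```typescript
// Subset of the Polly SynthesizeSpeech request used in this sketch.
interface SynthesizeSpeechInput {
  OutputFormat: "mp3";
  Text: string;
  VoiceId: string;
}

// Hypothetical helper: builds the request for an ".mp3" result.
function buildSynthesizeSpeechInput(text: string, voiceId: string): SynthesizeSpeechInput {
  return { OutputFormat: "mp3", Text: text, VoiceId: voiceId };
}

// Hypothetical helper: derives the audio object key from the document key,
// e.g. "uploads/letter.pdf" -> "uploads/letter.mp3".
function audioKeyFor(documentKey: string): string {
  const lastSlash = documentKey.lastIndexOf("/");
  const lastDot = documentKey.lastIndexOf(".");
  // Only strip an extension that belongs to the file name, not to a folder name.
  const stem = lastDot > lastSlash ? documentKey.slice(0, lastDot) : documentKey;
  return `${stem}.mp3`;
}

const speechInput = buildSynthesizeSpeechInput("Hello world", "Joanna");
console.log(JSON.stringify(speechInput), audioKeyFor("uploads/letter.pdf"));
```

With the AWS SDK for JavaScript v3, the request would go to `SynthesizeSpeechCommand` (`@aws-sdk/client-polly`), the upload would use `PutObjectCommand` (`@aws-sdk/client-s3`), and the pre-signed URL would come from `getSignedUrl` (`@aws-sdk/s3-request-presigner`).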

🌐 Frontend

The frontend is a basic React app deployed to and hosted on AWS Amplify. It allows the user to select a file, upload it to the S3 bucket and get the response from the state machine. Then the text is displayed and played back by an audio player. Cool, right?

If you want to try this app just visit https://main.dkzj57darqtkx.amplifyapp.com. I know it's not the most attractive website in the world, my design skills are null and void. But give it a try 🙃.

💡 Conclusion

In this article we saw how to orchestrate a completely serverless app using the amazing AWS Step Functions. I've learned a lot about it since I hadn't used this service before, and the same goes for the other Amazon AI products. I've also had a great time writing CDK code, and I recommend using Infrastructure as Code whenever possible. Not only does it allow you to manage your infrastructure in a more efficient and maintainable way, but it is also super fun to code!

I will definitely build more realistic AWS architectures, since I believe it's the best way to gain hands-on experience in the cloud. And of course I am open to receiving your feedback! Feel free to deploy the stack to your AWS account and let me know your thoughts in the comments section.

If you liked this post, then react to it with a beer please 🍺🤤. Thank you!